AI at the crossroads: Lessons from the frontlines of ethical innovation

How Bonterra’s leaders are redefining what responsible AI looks like for social good.

It’s easy to talk about AI. It’s harder to use it responsibly.

That’s what sparked Bonterra’s AI Town Hall, an honest conversation among product, data, and security leaders about what it takes to build AI that advances nonprofit missions without compromising trust.

One of the central ideas presented early on was clear: ethics can’t be an excuse for inaction, and fear can’t be a substitute for preparation. Responsible AI isn’t about waiting until everything feels “safe.” It’s about moving forward thoughtfully, transparently, and with intention.

That theme echoed throughout the discussion. Across audience questions, live polls, and internal debate, one takeaway remained constant: innovation and accountability must move in parallel. For nonprofits to truly benefit from AI, they need both the confidence to explore what’s possible and the guardrails to protect what matters most.

1. From fear to framework

When new technology feels uncertain, it’s tempting to freeze and wait for perfect rules, regulations, and frameworks before moving forward. But one of the clearest themes from the Town Hall was this: waiting for absolute clarity can create more risk than taking thoughtful first steps.

The message shared was simple and consistent: responsible AI isn’t discovered by standing still; it’s shaped through testing, learning, and iteration.

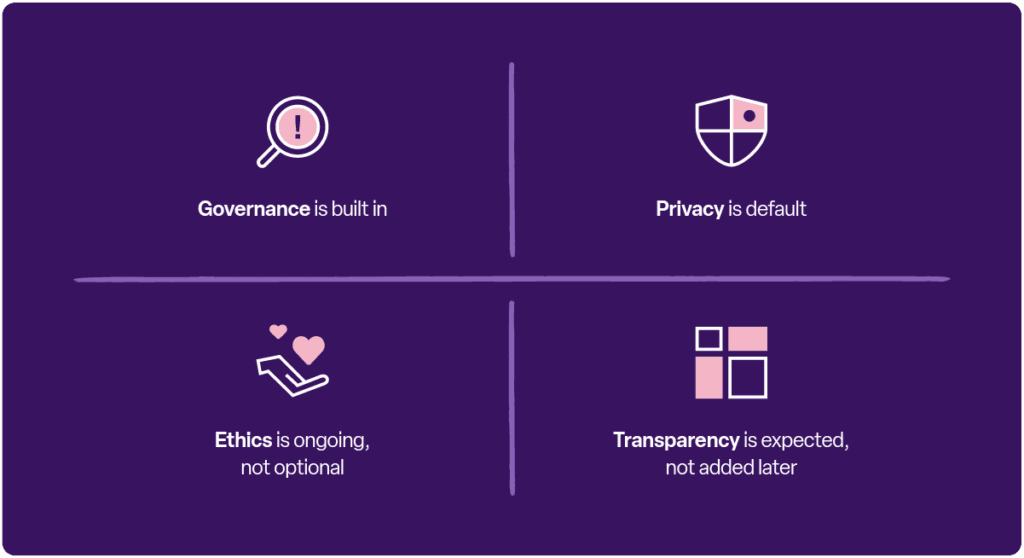

Readiness isn’t about perfection. It’s about experimentation with accountability built in. At Bonterra, that principle led to a structured governance model that includes an AI Executive Committee and an AI Working Committee, two groups that meet regularly to evaluate every initiative through the lenses of privacy, fairness, and compliance.

Every model, dataset, and AI-generated output is reviewed for transparency, accuracy, and risk before deployment. This framework aligns with recognized standards and frameworks such as ISO/IEC 42001, SOC 2, and NIST SP 800-53, delivering AI that is both enterprise-grade and mission-driven.

2. Collaboration, not delegation

The Town Hall reinforced a crucial mindset shift: AI doesn’t work instead of us; it works alongside us.

The core message was clear: AI can accelerate progress, but humans ensure relevance, context, and impact. The real power comes from partnership, not replacement.

Attendees were reminded that AI isn’t the hero of this story; people are. The best outcomes happen when technology handles the lift, while humans lead the direction, decisions, and meaning.

At Bonterra, this belief shapes how AI is built and delivered. The goal isn’t to introduce more tools or complexity; it’s to seamlessly embed AI into the workflows nonprofits already rely on. Whether it’s helping fundraisers segment donors more efficiently or guiding organizations toward better-fit grants, the mission stays the same:

Empower teams to spend less time on manual tasks and more time on work that moves their mission forward.

3. Oversight is the new innovation

If 2024 was the year of AI enthusiasm, 2025 must be the year of accountability.

The panel agreed that accountability can’t be outsourced. AI produces possibilities, not conclusions, and it’s the human review process that turns predictions into real decisions.

That’s why transparency is non-negotiable. Users deserve to know what data, prompts, and context shape every AI-generated result. Within Bonterra’s systems, those details are never hidden; they’re auditable, explainable, and subject to review.

It’s innovation with a conscience, and it’s redefining what progress looks like in the social-good sector.

4. Building for the planet, not just the platform

Chief Information Security Officer Dan Seals introduced another dimension often missing from the AI ethics conversation: environmental responsibility.

Bonterra’s approach minimizes energy impact by using foundation models only for inference, not training. In simple terms, that means dramatically lower energy consumption: over an entire year of AI activity, total usage is roughly equal to running a 60-watt light bulb for two days.
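To make that comparison concrete, here is the arithmetic, assuming “two days” means 48 hours of continuous operation (the 48-hour reading is our assumption; the wattage and duration come from the comparison above):

```latex
E = P \times t = 60\,\text{W} \times 48\,\text{h} = 2{,}880\,\text{Wh} \approx 2.9\,\text{kWh}
```

Under that reading, a full year of AI inference would total on the order of 2.9 kWh. This is a unit conversion of the stated comparison, not an independent measurement.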

By hosting all AI features on AWS’s 100% renewable-energy infrastructure, Bonterra reduces its carbon footprint while maintaining the performance nonprofits rely on. Responsible innovation doesn’t stop at ethics or privacy; it extends to sustainability, too.

5. Designed for social good, guided by mission

In an era where many tech companies chase scale and speed, the Town Hall closed with a different north star: AI should serve the mission, never overshadow it.

Across the conversation and presentation, one idea stood firm: AI is a tool, not a replacement. Humans still shape purpose, context, and judgment.

That belief is reflected in how Bonterra builds. Every AI capability, from donor insights to grant opportunity scoring, is designed to deepen relationships and expand impact, not dilute it. The goal isn’t more output; it’s more meaningful outcomes.

This philosophy goes beyond positioning. It drives process and product decisions at every stage, and every advancement is measured against one guiding question:

Does this make it easier for good people to do good work?

The future of ethical AI starts here

The Town Hall ended where it began, with collaboration. Technology alone won’t create trust, empathy, or impact. People will.

At Bonterra, we believe progress and responsibility are inseparable. Our commitment to ethical, human-led AI isn’t just about the tools we build; it’s about the trust we earn.

Want to be part of the conversation?

Work with Bonterra